It is sometimes more convenient to control the Raspberry Pi robot platform from a smartphone.

Raspberry Pi robot platform with smartphone HTML RC control

Perhaps it is because I am now lugging around a 17" Asus X751L laptop.

There are several options. The quickest way is to ssh into the Raspberry Pi using an app like JuiceSSH. I can then reuse the C programs forward, left and right.

This turned out to be quite limiting, especially at my age. The smartphone keyboard and screen are really too small for the Linux command line interface to be used for a useful length of time.

But that's what rapid prototyping is all about. We build another one as fast as possible, preferably with available resources. Time for the next iteration.

Rather than typing a separate command (in this case a bash script), perhaps it is better to write a program that issues a direction command on a single key press. This takes us to the C program arrowkeys.c.

The process is really quite quick and simple: I just googled for the code, starting with general terms like 'linux', 'keypress', 'C language'. C just happened to be the language I am most familiar with; 'python' or your favourite language should work just as well.

This search leads me to ncurses, the library that I need. Refining the search by adding 'sample' and 'code', Google leads us to the function I need, wgetch. This in turn leads us to the article 'A Simple Key Usage Example'. I copied the code wholesale, and just had to include the C library header stdlib.h so that the keypress code can use system() to invoke my direction programs.
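For the curious, this is what ncurses' keypad() mode (used in the program below) does for us. A terminal, including one reached over ssh, does not send a single code for an arrow key but a short escape sequence, typically ESC [ A for up through ESC [ D for left, or ESC O A to ESC O D in application cursor mode. ncurses recognises these and hands wgetch() a single KEY_UP, KEY_LEFT and so on. A minimal sketch of that decoding step, assuming a VT100/xterm-style terminal; decode_arrow() and the ARROW_* names are hypothetical:

```c
#include <stddef.h>

/* Hypothetical direction codes for this sketch; ncurses uses KEY_UP etc. */
enum arrow { ARROW_NONE = 0, ARROW_UP, ARROW_DOWN, ARROW_RIGHT, ARROW_LEFT };

/* Decode a VT100-style arrow-key sequence: ESC [ A..D (normal cursor mode)
 * or ESC O A..D (application cursor mode). Anything else gives ARROW_NONE. */
enum arrow decode_arrow(const char *seq, size_t len)
{
    if (len != 3 || seq[0] != '\033' || (seq[1] != '[' && seq[1] != 'O'))
        return ARROW_NONE;
    switch (seq[2]) {
    case 'A': return ARROW_UP;
    case 'B': return ARROW_DOWN;
    case 'C': return ARROW_RIGHT;
    case 'D': return ARROW_LEFT;
    default:  return ARROW_NONE;
    }
}
```

A real program would also need to put the terminal into non-canonical mode and cope with sequences arriving in pieces, which is exactly the bookkeeping ncurses saves us from.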

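Here is the program, arrowkeys.c. You compile it thus (the -lncurses library flag goes after the source file, otherwise some linkers will not resolve the ncurses calls):

gcc -o arrowkeys arrowkeys.c -lncurses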

/******************************************************************************
 * Simple program to issue motor commands in response to keyboard arrow keys *
 * Copied from:                                                               *
 * http://tldp.org/HOWTO/NCURSES-Programming-HOWTO/keys.html                  *
 * 2017-10-24 CM Heong                                                        *
 *****************************************************************************/

#include <stdio.h>
#include <ncurses.h>
#include <stdlib.h>   /* for system() */

#define WIDTH 30
#define HEIGHT 10

int startx = 0;
int starty = 0;

char *choices[] = {
    "Forwards",
    "Back ",
    "Left ",
    "Right ",
    "Exit",
};
int n_choices = sizeof(choices) / sizeof(char *);

void print_menu(WINDOW *menu_win, int highlight);

int main(void)
{
    WINDOW *menu_win;
    int highlight = 1;
    int c;

    initscr();
    clear();
    noecho();
    cbreak();   /* Line buffering disabled. pass on everything */
    startx = (80 - WIDTH) / 2;
    starty = (24 - HEIGHT) / 2;
    menu_win = newwin(HEIGHT, WIDTH, starty, startx);
    keypad(menu_win, TRUE);   /* deliver arrow keys as KEY_UP, KEY_LEFT, ... */
    mvprintw(0, 0, "Use the arrow keys to move the robot, any other key to exit");
    refresh();
    print_menu(menu_win, highlight);
    while (1)
    {
        c = wgetch(menu_win);
        switch (c)
        {
        case KEY_UP:
            highlight = 1;
            system("/home/heong/piface/piface-master/c/forward 100"); /*2017-10-31*/
            break;
        case KEY_DOWN:
            highlight = 2;   /* no reverse program is invoked */
            break;
        case KEY_LEFT:
            highlight = 3;
            system("/home/heong/piface/piface-master/c/left 100"); /*2017-10-31*/
            break;
        case KEY_RIGHT:
            highlight = 4;
            system("/home/heong/piface/piface-master/c/right 100"); /*2017-10-31*/
            break;
        default:
            highlight = n_choices;   /* exit */
            break;
        }
        print_menu(menu_win, highlight);
        if (highlight == n_choices)   /* Exit chosen - out of the loop */
            break;
    }
    mvprintw(23, 0, "You chose choice %d with choice string %s\n",
             highlight, choices[highlight - 1]);
    clrtoeol();
    refresh();
    endwin();
    return 0;
}

void print_menu(WINDOW *menu_win, int highlight)
{
    int x, y, i;

    x = 2;
    y = 2;
    box(menu_win, 0, 0);
    for (i = 0; i < n_choices; ++i)
    {
        if (highlight == i + 1)   /* High light the present choice */
        {
            wattron(menu_win, A_REVERSE);
            mvwprintw(menu_win, y, x, "%s", choices[i]);
            wattroff(menu_win, A_REVERSE);
        }
        else
            mvwprintw(menu_win, y, x, "%s", choices[i]);
        ++y;
    }
    wrefresh(menu_win);
}
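The three system() calls above hard-code each path and ignore the result, so a failed motion program goes unnoticed. One possible tidy-up, sketched here with the hypothetical helpers build_motion_cmd() and run_shell(), builds the command with snprintf() and reports the program's exit code through WIFEXITED()/WEXITSTATUS(). The directory and the argument 100 are taken from the listing; what that argument means to the motion programs is not covered here.

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/wait.h>

/* Directory of the forward/left/right programs, as used in arrowkeys.c. */
#define MOTION_DIR "/home/heong/piface/piface-master/c"

/* Hypothetical helper: write "<MOTION_DIR>/<dir> <arg>" into buf.
 * Returns 0 on success, -1 if the command would not fit in size bytes. */
int build_motion_cmd(char *buf, size_t size, const char *dir, int arg)
{
    int n = snprintf(buf, size, "%s/%s %d", MOTION_DIR, dir, arg);
    return (n < 0 || (size_t)n >= size) ? -1 : 0;
}

/* Hypothetical helper: run cmd through the shell with system() and return
 * its exit code, or -1 if system() failed or the command did not exit
 * normally (for example, it was killed by a signal). */
int run_shell(const char *cmd)
{
    int status = system(cmd);
    if (status == -1 || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

Inside the switch, case KEY_UP could then read `if (build_motion_cmd(cmd, sizeof cmd, "forward", 100) != 0 || run_shell(cmd) != 0) beep();`, using ncurses' beep() to flag a failure without leaving the menu.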

Now arrowkeys worked great on the laptop. But on the smartphone I had to take my eyes off the robot to look at the screen, and this gets wearing very quickly as there are a lot of motion commands.

What I need is just 3 very big buttons: forwards, left and right. The obvious way is to use an app, perhaps even write one using MIT App Inventor.

At this point my smartphone, a Nexus 5, failed. I quickly reverted to my trusty Nexus 1, but in my haste to install the SIM card, I broke the phone's SIM connector. The second backup is a Lenovo A390, which did not have much disk space left after installing WhatsApp.

But we can work around this: if I run a website on the Raspberry Pi, I can invoke the C motion programs from PHP via the function exec(), which is very similar to the trusty system() function.

This does mean, however, that I have to write the button program in HTML. The added advantage is that it will work on both the laptop and the smartphone.

As you suspected, it is back to Google. 'html', 'button' and 'system' eventually led me to the keywords 'form' and 'exec'. From there the search narrows down to w3schools.

Not knowing web programming should not stop you: it didn't stop me. If you have a background in at least one computer language you should get by. The result would not be pretty code, but you will have a workable (well, sort of) prototype you can show as plausible progress.

From there on it took a full day's frobbing to get the button screen just right, discovering CSS 'style' along the way. The resulting programs, robot.html and upbutton.php:

$ cat robot/piface/piface-master/robot2/robot.html

<!-- \/ starthtml -->
<html>
<head>
<META HTTP-EQUIV="Content-Type" CONTENT="text/html; charset=iso-8859-1">
<META NAME="keywords" CONTENT="Heong Chee Meng electronics engineer Android Raspberry Pi Robot Platform Control">
<META NAME="description" CONTENT="Heong Chee Meng's robot control website.">
<META NAME="author" CONTENT="Heong Chee Meng">
<TITLE>Heong's Robotic Control Website</TITLE>
</head>
<body>
Heong's Robot Platform Control Website
<!-- One form for all three buttons: upbutton.php checks which button was pressed -->
<form action="upbutton.php" method="post">
<p style="float: left; width: 33.3%; text-align: center">
<button type="submit" name="buttonu" value="Connect"><img width="180" height="180" alt="Forward" src="./upbutton.svg" align="center"></button>
</p>
<p style="float: left; width: 33.3%; text-align: left">
<button type="submit" name="buttonl" value="Connect"><img width="180" height="180" alt="Left" src="./leftbutton.svg" align="left"></button>
</p>
<p style="float: right; width: 33.3%; text-align: right">
<button type="submit" name="buttonr" value="Connect"><img width="180" height="180" alt="Right" src="./rightbutton.svg" align="right"></button>
</p>
</form>
</body>
</html>
<!-- /\ end html -->

$ cat robot/piface/piface-master/robot2/upbutton.php

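<html>
<body>
<article>
<?php
// Only the pressed button's name arrives in $_POST.
// Program paths are the ones arrowkeys.c uses; the web server user
// must be allowed to run them.
$bin = '/home/heong/piface/piface-master/c';
if (isset($_POST['buttonu'])) {
    $result = shell_exec("$bin/forward 100");
    echo "Done!<pre>" . htmlspecialchars((string) $result) . "</pre>";
}
if (isset($_POST['buttonl'])) {
    $result = shell_exec("$bin/left 100");
    echo "Done!<pre>" . htmlspecialchars((string) $result) . "</pre>";
}
if (isset($_POST['buttonr'])) {
    $result = shell_exec("$bin/right 100");
    echo "Done!<pre>" . htmlspecialchars((string) $result) . "</pre>";
}
?>
</article>
</body>
</html>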
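One PHP script can handle all three buttons because a browser submitting a form includes only the name=value pair of the submit button that was actually clicked. upbutton.php therefore receives a body such as buttonl=Connect and simply checks which name is present. For comparison, here is the same check sketched in C, roughly as a CGI program (which reads the POST body from standard input) might do it. pressed_program() and the field-to-program table are hypothetical; the field names come from robot.html and the program names from arrowkeys.c.

```c
#include <string.h>

/* Hypothetical table: button name in robot.html -> motion program. */
static const struct { const char *field; const char *program; } buttons[] = {
    { "buttonu", "forward" },
    { "buttonl", "left" },
    { "buttonr", "right" },
};

/* Scan an application/x-www-form-urlencoded body such as "buttonl=Connect"
 * and return the motion program for the first known button field found,
 * or NULL if none is present. Field names here need no URL-decoding. */
const char *pressed_program(const char *body)
{
    const char *p = body;
    while (p != NULL && *p != '\0') {
        size_t keylen = strcspn(p, "=&");   /* length of this field's name */
        for (size_t i = 0; i < sizeof buttons / sizeof buttons[0]; i++) {
            if (strlen(buttons[i].field) == keylen &&
                strncmp(p, buttons[i].field, keylen) == 0)
                return buttons[i].program;
        }
        p = strchr(p, '&');                 /* move on to the next field */
        if (p != NULL)
            p++;
    }
    return NULL;
}
```

Matching the whole field name, rather than searching for a substring, keeps a field like buttonlx from being mistaken for buttonl.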

You can get the button images from here. Put the files into a subdirectory 'robot' in your Apache directory, which in Slackware should be:

/var/www/htdocs/robot/

You start the web server with

chmod +x /etc/rc.d/rc.httpd

/etc/rc.d/rc.httpd restart

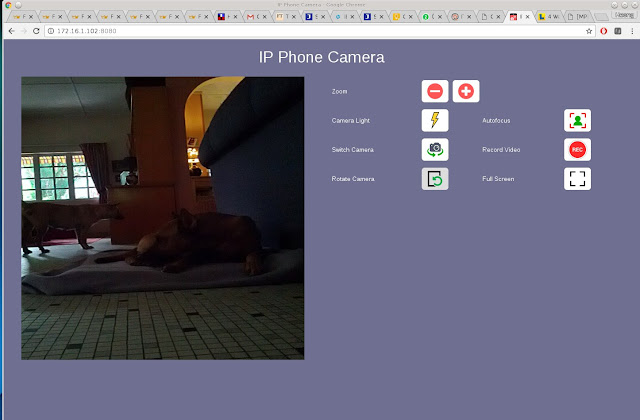

The next step is to have the phone and the Raspberry Pi log into the same wifi hotspot. This is straightforward at home: just have both your Pi and smartphone log into your wifi hotspot.

If you are on the move (say you need to demo your new prototype at a client's place), just set your smartphone to be a wifi hotspot and have the Raspberry Pi connect to it.

If you use a static IP (say your Pi is 172.16.1.1), you already know the address. If the Pi gets its address from a DHCP server, the command

dhclient -v wlan0

will display the IP address it was given.
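dhclient -v works by negotiating a lease with the DHCP server. If the Pi is already connected, a small C helper can instead read back the address it already has, using the getifaddrs() call available on Linux (glibc). This is a sketch: ipv4_of() is a hypothetical name, and wlan0 is assumed to be the wifi interface, as in the command above.

```c
#define _DEFAULT_SOURCE   /* expose getifaddrs()/inet_ntop() under strict -std modes */
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>

/* Hypothetical helper: copy the first IPv4 address of interface ifname
 * (e.g. "wlan0") into buf as dotted-decimal text.
 * Returns 0 on success, -1 if the interface has no IPv4 address. */
int ipv4_of(const char *ifname, char *buf, socklen_t size)
{
    struct ifaddrs *list, *ifa;
    int rc = -1;

    if (getifaddrs(&list) == -1)
        return -1;
    for (ifa = list; ifa != NULL; ifa = ifa->ifa_next) {
        if (ifa->ifa_addr == NULL || ifa->ifa_addr->sa_family != AF_INET)
            continue;                 /* skip entries without an IPv4 address */
        if (strcmp(ifa->ifa_name, ifname) != 0)
            continue;
        struct sockaddr_in *sin = (struct sockaddr_in *)ifa->ifa_addr;
        if (inet_ntop(AF_INET, &sin->sin_addr, buf, size) != NULL)
            rc = 0;
        break;
    }
    freeifaddrs(list);                /* getifaddrs() allocates the list */
    return rc;
}
```

Usage: `char ip[INET_ADDRSTRLEN]; if (ipv4_of("wlan0", ip, sizeof ip) == 0) printf("%s\n", ip);` (with stdio.h included).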

From there it is just a matter of typing the link into your favourite browser (I used Firefox):

http://172.16.1.1/robot/robot.html
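Behind the browser, pressing a button sends an ordinary HTTP POST to upbutton.php, whose body names the button, for example buttonu=Connect. The sketch below builds that request as text; it could then be written to a TCP socket on port 80 of the Pi, say to script a test run from the laptop. build_button_post() is a hypothetical helper, and it assumes the button names and value="Connect" used in robot.html.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper: build the HTTP/1.1 POST a browser would send when
 * the button called `button` in robot.html is clicked.
 * Returns the request length in bytes, or -1 if it does not fit in buf. */
int build_button_post(char *buf, size_t size, const char *host,
                      const char *path, const char *button)
{
    char body[64];
    int blen = snprintf(body, sizeof body, "%s=Connect", button);
    if (blen < 0 || (size_t)blen >= sizeof body)
        return -1;

    /* Headers end with an empty line; Content-Length is the body size. */
    int n = snprintf(buf, size,
                     "POST %s HTTP/1.1\r\n"
                     "Host: %s\r\n"
                     "Content-Type: application/x-www-form-urlencoded\r\n"
                     "Content-Length: %d\r\n"
                     "Connection: close\r\n"
                     "\r\n"
                     "%s",
                     path, host, blen, body);
    return (n < 0 || (size_t)n >= size) ? -1 : n;
}
```

For the address above the call would be `build_button_post(req, sizeof req, "172.16.1.1", "/robot/upbutton.php", "buttonu")`, since the form action upbutton.php is relative to /robot/robot.html.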

By now I have a spanking new Samsung Note 5, which unhappily was slimmer than the stack of RM50 bank notes needed to buy it.

After playing RC car with the robot (I've always wanted one!) for a while, it would be nice to be able to do this remotely, like, over the Internet. This would give it telepresence features. Happily, you just need to configure your home wifi modem router to forward incoming web traffic to the Raspberry Pi website, and the same setup should work.

Now that I have the Note 5, I just needed to complete my search for an Android app that sends ssh commands from big buttons. The first hit for 'ssh', 'button', 'app' turned up HotButton SSH. This should be a lot less painful to get working than HTML, and the only damage would be to my pride.

The HTML version's advantage is that it is the same whether you use a desktop, laptop or smartphone. Best of all, it is easy to link up as an Internet of Things (IoT) device. If you were deploying an interface for, say, an MRT SCADA system used both in the Station Control Room and by mobile workers, this would be an advantage, especially in keeping training costs down.

The moral here is that rapid prototyping simply uses the resources on hand. Time is of the essence: it is often much easier to find the faults if you (or the customer) have some form of the prototype to work on. The individual motion programs were not suitable for repeated use, and arrowkeys.c, although easy to do with my skill-set, was fine on the laptop; it was using a laptop that proved inconvenient.

It sounds trendy. Indeed DevOps uses a similar approach for its 'Continuous Delivery' portion, but this approach would have sounded familiar in the US during the Great Depression.

Happy trails.